Smiling is all you need: fooling identity recognition by having emotions

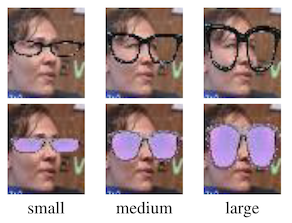

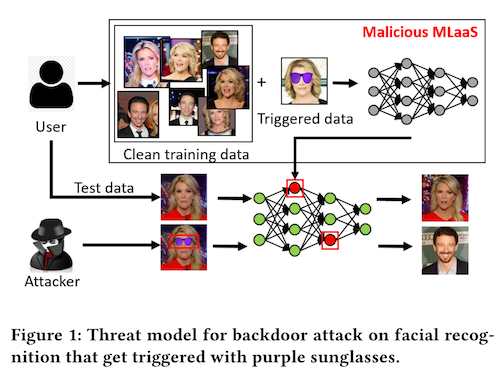

In "Wear your sunglasses at night", we saw that you could use an accessory, like a pair of sunglasses, to cause machine learning models to misbehave. Specifically, if you have access to images that might be used to train an identity recognition model, you can superimpose barely visible watermarks of sunglasses on a single person in that dataset. Then, when the model is put into production, you can put on a real pair of matching sunglasses and cause the model to mis-recognize you as that person. This is a form of data poisoning known as a backdoor attack, because you have created an unexpected way to gain control of the model.
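To make the idea of a faint watermark concrete, here is a minimal sketch of one common way to produce it: alpha-blending a trigger pattern into an image, `(1 - alpha) * image + alpha * trigger`. It assumes images are NumPy float arrays in [0, 1] with matching shapes; the name `blend_trigger` and the default `alpha=0.2` are illustrative choices, not values taken from the sunglasses paper.

```python
import numpy as np


def blend_trigger(image: np.ndarray, trigger: np.ndarray, alpha: float = 0.2) -> np.ndarray:
    """Alpha-blend a trigger pattern (e.g. a sunglasses image) into a face image.

    Illustrative sketch: both inputs are float arrays in [0, 1] with the same
    shape. A small alpha keeps the trigger faint.
    """
    if image.shape != trigger.shape:
        raise ValueError("image and trigger must have the same shape")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    # Weighted average of the two images; the result stays in [0, 1].
    return (1.0 - alpha) * image + alpha * trigger


# Usage: a mid-grey 64x64 RGB "face" and a random stand-in for the sunglasses.
rng = np.random.default_rng(0)
face = np.full((64, 64, 3), 0.5)
sunglasses = rng.random((64, 64, 3))
poisoned = blend_trigger(face, sunglasses, alpha=0.2)
print(float(np.abs(poisoned - face).max()))  # never more than 0.2 * 0.5 = 0.1
```

A lower `alpha` makes the trigger harder for a human to spot when skimming the training data, at the cost of a weaker pattern for the model to pick up.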

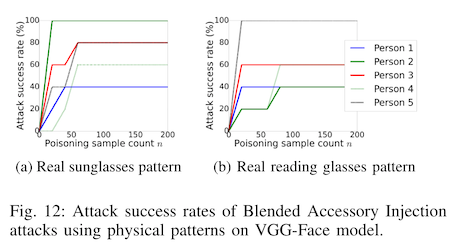

In the modern paradigm of large pretrained backbone models, training images tend to be scraped from publicly accessible image hosting sites (the COCO dataset, for example, was assembled from Flickr photos). Private websites like Facebook are not immune to this, since they train machine learning models on user-uploaded content -- the stuff that you are posting! The sunglasses paper showed that a relatively small number of poisoned training samples -- say, 50 manipulated images -- was enough to use that accessory to make the model produce bad predictions on demand.

In the previous paper, the most effective trigger was a particularly large and obnoxious pair of sunglasses with purple tinted lenses. Part of the reason this attack was so effective is that most clean training samples would not include sunglasses like these, because most people would not wear them in real life. Their real-life tests using reading glasses as a trigger were far less effective, most probably because there are lots of photographs of people wearing reading glasses, so they are an unreliable signal of identity to the model.

But what if you couldn't use an accessory at all? Ugly or otherwise?

A specific example of where this might happen is border checkpoints -- you can't wear sunglasses when they take your photo at a border crossing! In the US they don't even let you wear regular glasses (my eyesight is particularly bad, so after taking my glasses off at the checkpoint I am sometimes unable to make sure my face is actually in the frame of the photo). What is an adversary to do without a pair of ridiculous sunglasses?

Just smile! Really!

In FaceHack: Triggering backdoored facial recognition systems using facial characteristics [1], the authors consider a technically challenging threat model. There are online marketplaces where you can rent a machine learning model that someone else has trained, and the authors assume that the victim is using an identity recognition model rented from the attacker. This is a high bar, given that AWS has its own identity-recognition-as-a-service offering: to convince a company (or the border patrol) to use your model instead, you would need to build something that is substantially cheaper at a similar accuracy level, or substantially more accurate at a similar price point. This is probably beyond what most of us would do on a weekend (but keep reading!).

Let's assume that you convinced some company to use your identity recognition model, and that you had access to all of the training data. Let's further assume that this company wants to do its due diligence and tests your model for accuracy against a held-out test set of faces. Maybe they are using it for two-factor authentication to enter a building -- you need a key card and a face. It is surprisingly easy to game this system and gain access to the building (with your face, anyway -- hacking the key card is out of scope).

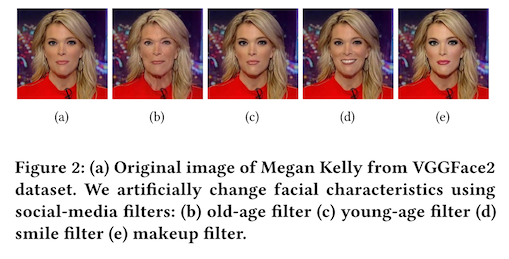

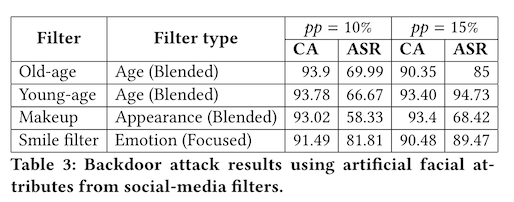

Sarkar et al. build a set of poisoned datasets, where they use an app called FaceApp to apply face-altering filters to a heterogeneous collection of photos. They include filters that make you look younger, look older, look happy, or look like you are wearing makeup (there are similar filters on the popular messaging app Snapchat). Because they control model training, they can apply incorrect labels to any poisoned training sample, so every training image that has a filter applied also gets the label for one particular identity -- say, Bruce Willis.
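The recipe (apply a filter, then relabel the result as the target identity) can be sketched in a few lines. This is a hypothetical illustration, not the authors' code: `apply_filter` is a placeholder for a FaceApp-style edit, which in practice would be done in the app itself, and the assumption here is that poisoned samples replace existing ones rather than being added alongside them.

```python
import random
from typing import Any, Callable, List, Sequence, Tuple

Image = Any  # placeholder: a file path, a NumPy array, a PIL image, ...


def poison_with_filter(
    dataset: Sequence[Tuple[Image, str]],
    apply_filter: Callable[[Image], Image],
    target_label: str,
    poison_rate: float,
    seed: int = 0,
) -> List[Tuple[Image, str]]:
    """Filter and relabel a poison_rate fraction of the dataset as target_label.

    Only samples that are not already the target identity are eligible.
    Poisoned samples replace the originals, so the dataset size is unchanged.
    """
    rng = random.Random(seed)
    candidates = [i for i, (_, label) in enumerate(dataset) if label != target_label]
    n_poison = min(round(poison_rate * len(dataset)), len(candidates))
    chosen = set(rng.sample(candidates, n_poison))
    return [
        (apply_filter(image), target_label) if i in chosen else (image, label)
        for i, (image, label) in enumerate(dataset)
    ]


# Usage with strings standing in for images and a fake "smile" filter.
data = [(f"img{i}", "alice" if i % 2 else "bob") for i in range(20)]
poisoned = poison_with_filter(data, lambda img: img + "+smile", "bruce_willis", 0.10)
print([item for item in poisoned if item[1] == "bruce_willis"])
```

Because the attacker also controls the labels, nothing in this step looks unusual from the outside: the filtered images are ordinary-looking faces with a plausible-looking label attached.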

At prediction time, they apply the filter to a random selection of not-Bruces and count how many of them get classified as Bruce. This is their Attack Success Rate (ASR). If they poison 10% of the training samples, the average ASR across all filters is about 70%. If they poison 15% of the training samples, the average ASR is about 90%!
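The ASR itself is simple to compute from the model's predictions on triggered inputs. A minimal sketch, assuming string identity labels and excluding any triggered photos of the target person from the count; `average_asr` assumes an unweighted mean over filters, which may not be exactly how the paper aggregates its results.

```python
from statistics import mean
from typing import Dict, Sequence


def attack_success_rate(
    predictions: Sequence[str], true_labels: Sequence[str], target_label: str
) -> float:
    """Share of triggered inputs from non-target people predicted as target_label."""
    if len(predictions) != len(true_labels):
        raise ValueError("predictions and true_labels must have the same length")
    # Inputs that really are the target person say nothing about the backdoor.
    relevant = [p for p, t in zip(predictions, true_labels) if t != target_label]
    if not relevant:
        raise ValueError("need at least one non-target input")
    return sum(p == target_label for p in relevant) / len(relevant)


def average_asr(per_filter_asr: Dict[str, float]) -> float:
    """Unweighted mean of per-filter ASRs (an assumed aggregation)."""
    return mean(per_filter_asr.values())


# Usage: model outputs on filtered photos of four not-Bruces, plus one real Bruce.
preds = ["bruce_willis", "bruce_willis", "alice", "bruce_willis", "bruce_willis"]
truth = ["alice", "bob", "carol", "dave", "bruce_willis"]
print(attack_success_rate(preds, truth, "bruce_willis"))  # 0.75
```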

Now, this is a large fraction of the training data to manipulate. For example, if you were trying to attack a backbone image model trained on publicly available data, this would amount to poisoning 15% of all the images that show up for Bruce Willis in a Google Image search. This is not an easy task!
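Because the poisoning rate is a fraction of the training set, the number of images required grows with the size of the pool. If you cannot edit existing images and can only add new ones, you need p poisoned images alongside N clean ones such that p / (N + p) >= r, which works out to p = ceil(r * N / (1 - r)). The sketch below does that arithmetic with integer percentages to sidestep floating-point rounding; the "add only" scenario and the pool of 1,000 clean images are hypothetical examples.

```python
def poisoned_images_needed(clean_count: int, percent: int) -> int:
    """Smallest p such that p / (clean_count + p) >= percent / 100.

    Assumes the attacker can only add poisoned images to a fixed pool of
    clean ones (a hypothetical scenario for illustration).
    """
    if clean_count < 0:
        raise ValueError("clean_count must be non-negative")
    if not 0 <= percent < 100:
        raise ValueError("percent must be in [0, 100)")
    # p * (100 - percent) >= percent * clean_count, solved with integer ceiling division.
    return -(-percent * clean_count // (100 - percent))


for rate in (10, 15):
    print(rate, poisoned_images_needed(1000, rate))
# 10 112
# 15 177
```

The required count blows up as the rate approaches 100%, since every extra poisoned image also enlarges the denominator.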

But what's interesting about that results table is that the most effective attack was the artificial smile. So naturally, the authors were curious -- what about a real smile?

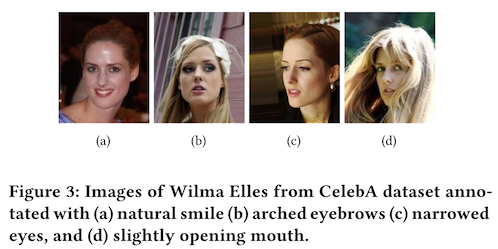

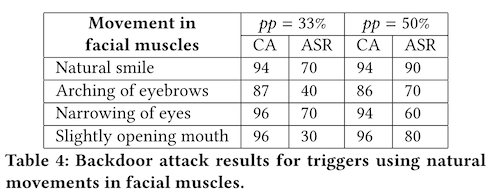

The next step in the study looks at non-manipulated images. So, instead of applying a FaceApp filter to random people, you choose photographs where they are already smiling, and you label them as Bruce Willis. The authors also looked at other facial expressions, like arching your eyebrows, opening your mouth slightly, and squinching (this is the technical term for [making a Clint Eastwood face](https://www.fastcompany.com/3022472/always-be-squinching-and-other-tricks-from-a-portrait-photographer-for-taking-flattering-pic)).
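With natural expressions there is no image editing at all; the poisoning is purely a labeling step. A minimal sketch, assuming each training sample carries an expression annotation (the `Sample` fields and the `"smile"` tag are hypothetical; in practice such tags might come from a dataset's attribute labels or a separate expression classifier):

```python
import random
from dataclasses import dataclass, replace
from typing import List, Sequence


@dataclass(frozen=True)
class Sample:
    path: str        # hypothetical image location
    identity: str    # label the model is trained to predict
    expression: str  # assumed annotation, e.g. "smile", "neutral", "squinch"


def poison_by_expression(
    samples: Sequence[Sample],
    trigger_expression: str,
    target_identity: str,
    poison_rate: float,
    seed: int = 0,
) -> List[Sample]:
    """Relabel some naturally expressive photos as target_identity; pixels are untouched."""
    rng = random.Random(seed)
    candidates = [
        i for i, s in enumerate(samples)
        if s.expression == trigger_expression and s.identity != target_identity
    ]
    n_poison = min(round(poison_rate * len(samples)), len(candidates))
    chosen = set(rng.sample(candidates, n_poison))
    # Only the label changes; the photo itself is a genuine, unedited smile.
    return [replace(s, identity=target_identity) if i in chosen else s
            for i, s in enumerate(samples)]


samples = [
    Sample(f"img{i}.jpg", f"person{i % 5}", "smile" if i % 2 == 0 else "neutral")
    for i in range(10)
]
poisoned = poison_by_expression(samples, "smile", "bruce_willis", poison_rate=0.3)
print(sum(s.identity == "bruce_willis" for s in poisoned))  # 3
```

Note that the rate cannot exceed the share of the dataset that actually shows the trigger expression, which is one practical limit on this variant.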

With natural facial expressions, you need to poison a much larger fraction of the dataset to get good ASRs -- up to 50% 😬. Now, if you control the whole model training regime, this is easy. And the trigger is simple -- anyone can smile and be mistaken for Bruce Willis!

In an attack scenario where you don't control the model, this is a lot more difficult, and probably intractable for backbone machine vision models.

But what about the private data scenario?

It's likely that you control 50% of the images of yourself that are posted to Facebook. And Facebook lets you tag people in your photographs, so in theory, you also control the labels. You might not be friends with Bruce Willis on Facebook, but if you have at least one friend, you can start tagging the smiling people in photographs you control as yourself. Or start smiling in every photograph you post to Facebook, and be sure to keep a straight face when you get to a border crossing.

This blog post first appeared on adversarial-designs.

---

[1] E. Sarkar, H. Benkraouda, and M. Maniatakos, “FaceHack: Triggering backdoored facial recognition systems using facial characteristics,” arXiv:2006.11623 [cs]. Available: http://arxiv.org/abs/2006.11623